Having a brain that still functions well enough and a ready stock of real-world experiences, I am not a frequent flier to AI destinations, but a confusion that happened (to someone else) the other day prompted me to see whether ChatGPT would have been any less confused than the person I witnessed in the real world.

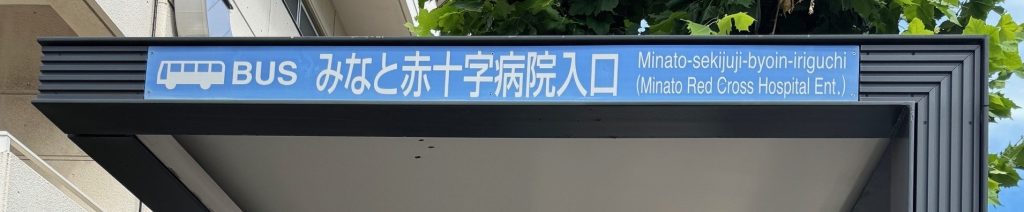

I was sitting waiting for a bus at the みなと赤十字病院入口 (Minato Red Cross Hospital Entrance) bus stop, when I saw a clearly confused young foreign woman looking alternately at her mobile phone and the name of the bus stop at which she had just gotten off a bus.

Seeing her confusion and that she was on crutches, I asked her where she was looking to go.

She pointed to the name of the bus stop and wondered why she couldn’t see the hospital. Where were they hiding what was surely a substantial medical facility? The answer to the mystery lies in the way bus stops are sometimes named, and in the fact that other buses stop at a bus stop named みなと赤十字病院, without the 入口 (which means entrance), and that stop is located right at the entrance to the hospital. Why is a bus stop named XYZ Entrance not at the entrance, while one without “Entrance” in its name is at the actual entrance to XYZ?

The Entrance in the bus stop name refers to its being at the entrance to the road leading to the hospital. The stop is shared by at least three bus routes that do not go to the hospital entrance itself.

Would ChatGPT, in all its wisdom, have solved this problem before forcing someone on crutches to wait for yet another bus or walk what would be a considerable and perhaps annoying distance on crutches?

My prompt to ChatGPT:

There is a bus stop in Yokohama with the name 赤十字病院入口. Where is that bus stop?

Here’s what it came back with:

The bus stop 赤十字病院入口 (more precisely, 「みなと赤十字病院入口」) is located in Yokohama’s Naka Ward, serving the Yokohama Minato Red Cross Hospital.

[Wow, it could give me the formal name of the hospital!]

Here’s the detailed information:

- Address: 神奈川県横浜市中区新山下3丁目15 (or nearby 3-1–6)

[I guess that’s right, but I didn’t check.]

- Coordinates: approximately 35.436557, 139.661561.

[Who cares, unless you are planning on dropping a hospital-buster bomb on the place?]

- It’s situated along the bus routes that connect Yokohama / Sakuragicho / Motomachi-Chukagai stations to the hospital. [Correct, except that some of the bus routes stopping where this occurred have no Motomachi-Chukagai stop.] The stop is right by the hospital entrance. [Wrong. It was fooled just like the young lady on crutches. It’s nowhere near being “right by the hospital entrance.”]

ChatGPT proved that, just like a human with no knowledge or experience of how bus stops can be named, it could mistake the name as being literally descriptive of the stop’s location.

Of course, I suspected that it would be fooled and knew the reason for the confusion.

People in the neighborhood who might frequent the hospital and have more general real-world experience riding buses (something that ChatGPT does not have and never will have) realize that the hospital entrance bus stop is not at the hospital entrance. The hospital stop is at the hospital, and the hospital entrance stop is about a five-minute walk away.

Most of them probably think of that bus stop as being right at the entrance to a Manchinro shop, at which I frequently buy shumai, which is what I had just done when I encountered the confused visitor.